Use LlamaIndex Workflow to Create an Ink Painting Style Image Generation Workflow

Add strong artistic flair through fine control of LLM context

In today's article, I'll help you build a workflow that generates ink illustrations with a strong Eastern style. The workflow also supports multiple rounds of adjustments to both the drawing prompt and the final image, while keeping token and time costs down.

You can find the source code for this project at the end of the article.

Introduction

Recently I wanted to create an agent workflow that could quickly generate images for my blog at low cost.

I wanted my blog images to have strong artistic flair and classical Eastern charm. So I needed a workflow that could precisely control the LLM context and keep adjusting both the drawing prompts and the final image, while keeping token and time costs to a minimum.

Then I immediately faced a dilemma:

If I chose a low-code platform like Dify or n8n, I wouldn't get enough flexibility. These platforms can't support adjusting prompts through conversation or generating blog images that match each article's style.

If I chose a popular agent development framework like LangGraph or CrewAI, its high level of abstraction would prevent fine control over how the agent application executes.

If you were faced with this task, how would you choose?

Fortunately, the world isn't black and white. After many failed attempts, I finally found a great solution: LlamaIndex Workflow.

It provides an efficient workflow development process without abstracting away too much of the agent's execution, so the image generation program runs exactly as I require.

Today, I'll use the latest LlamaIndex Workflow 1.0 to build a workflow that generates ink-painting-style illustrations.

Why should you care?

In today's article, I will:

- Guide you to learn the basic usage of LlamaIndex Workflow through project practice.

- Use Chainlit to build a chatbot interface where you can see the generated images directly.

- Use DeepSeek, rather than the DALL-E-3 model itself, to generate drawing prompts better suited to the project.

- Use multi-turn conversations to make further adjustments to the generated prompts or the final generated images.

- Optimize costs at the token and time level through fine control of the LLM context.

Ultimately, you only need a simple description to draw a beautiful ink painting style image.

More importantly, by practicing this project, you will gain a preliminary understanding of how workflows are used to develop complex, customized requirements in enterprise-level agent applications.

If you need some prerequisite knowledge, I have written a separate article that explains the event-driven architecture of LlamaIndex Workflow in detail.

Business Process Design

For agent workflow applications, I strongly recommend designing the business process before you start coding. This helps you grasp how the entire program runs.

In this chapter, I will demonstrate my complete design thinking for the business process flow of this project:

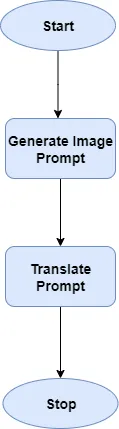

Prompt generation process

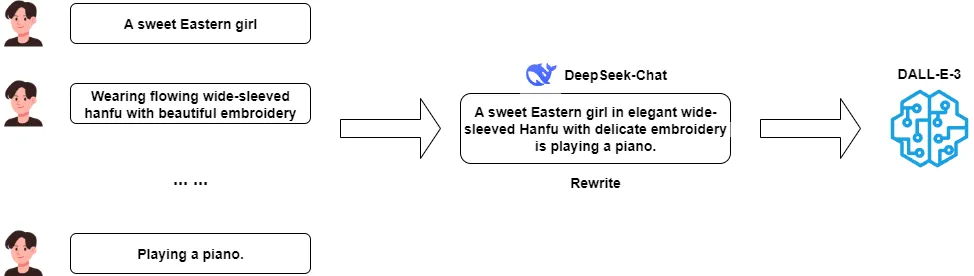

In today's project, I'm using DALL-E-3 for drawing. DALL-E-3 itself has the ability to rewrite user intentions into detailed prompts suitable for drawing.

But our requirements are higher: we want DALL-E-3 to draw the picture exactly as we imagine it. So we move the step of rewriting the user's intent into a detailed prompt out of the DALL-E-3 model and into a node of our own workflow.

Since today's theme is drawing beautiful ink-painting-style illustrations, I need an LLM that fully understands the Eastern ambiance in the user's intent and can expand it into a drawing prompt that DALL-E-3 understands. Here I chose the DeepSeek-Chat model.

Because DeepSeek is instructed to write DALL-E-3 drawing prompts, the prompts it generates are entirely in English.

If you are proficient in English, you can stop at this step. But if, like me, you are not a native English speaker and want to understand the generated prompts accurately, you can add a translation node. This is easy to do.

Finally, the generated prompts and their translations will be returned to the user through LlamaIndex Workflow's StopEvent.
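To make the three nodes of this branch concrete, here is a minimal, library-free sketch. It assumes an LLM call can be modeled as a plain function `(system_prompt, user_message) -> reply`; the `LLM` alias, `PromptResult`, `run_prompt_branch`, and both system prompts are hypothetical illustrations, not the article's code or LlamaIndex's API. `PromptResult` simply stands in for the data the real workflow would return through its StopEvent.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical: an LLM call is modeled as (system_prompt, user_message) -> reply text.
LLM = Callable[[str, str], str]

# Illustrative system prompts; the article's actual prompts are not shown here.
PROMPT_WRITER_SYSTEM = (
    "You are a master of Chinese ink-wash painting. Expand the user's idea "
    "into one detailed English drawing prompt for DALL-E-3."
)
TRANSLATOR_SYSTEM = "Translate this drawing prompt into the user's native language."


@dataclass
class PromptResult:
    """Stands in for the payload the real workflow returns via its StopEvent."""
    prompt: str
    translation: Optional[str] = None


def run_prompt_branch(user_input: str, writer: LLM,
                      translator: Optional[LLM] = None) -> PromptResult:
    # 1. The writer model (DeepSeek-Chat in the article) expands the user's intent.
    prompt = writer(PROMPT_WRITER_SYSTEM, user_input)
    # 2. Optional translation node for readers who are not native English speakers.
    translation = translator(TRANSLATOR_SYSTEM, prompt) if translator else None
    # 3. Hand both back to the caller, mirroring the StopEvent at the end of the branch.
    return PromptResult(prompt=prompt, translation=translation)
```

To try it offline, pass plain lambdas for `writer` and `translator`; in a real setup the writer would call DeepSeek and the translator could be any chat model.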

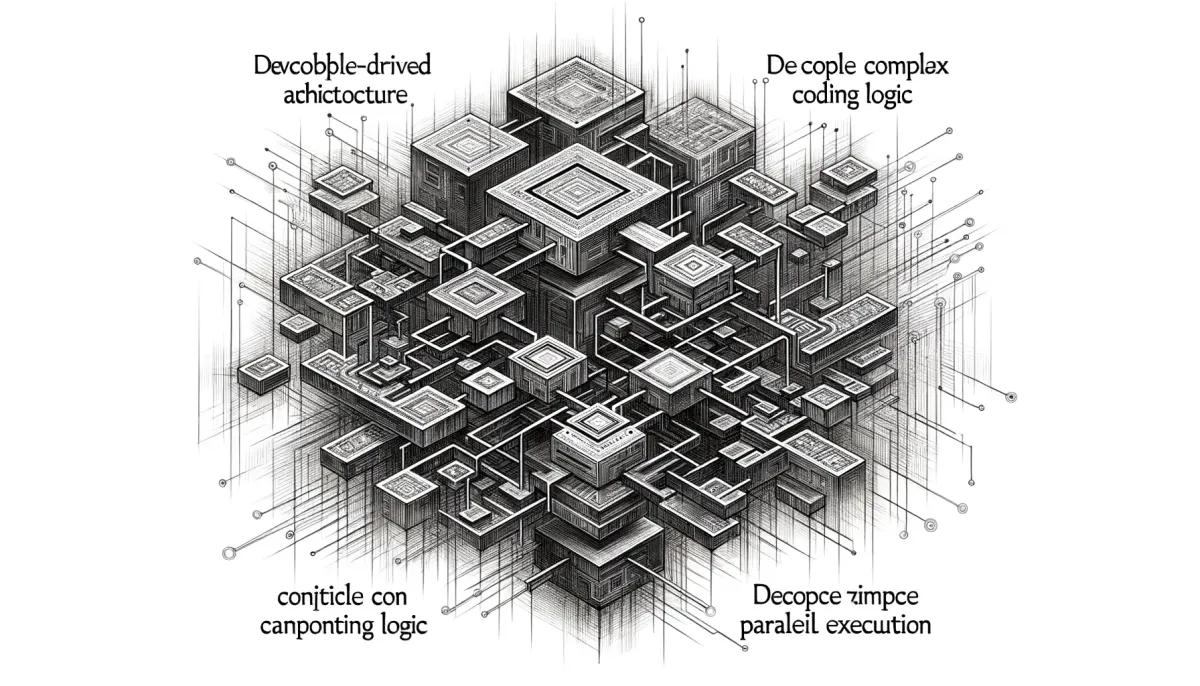

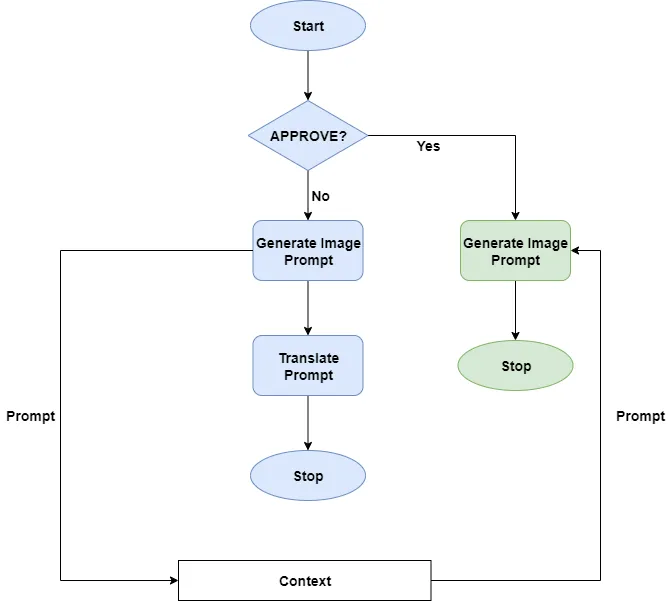

Using context sharing to decouple workflow loops

After the prompt is generated, we either pass it to DALL-E-3 to generate the image or go back and let DeepSeek adjust the prompt again.

At this point, you are probably thinking of adding user feedback and workflow iteration: based on the generated image, the user gives modification suggestions, the workflow regenerates the prompt, and this loops until the output is satisfactory.

Since we need to support user feedback after both prompt generation and image generation nodes, this loop will greatly increase the complexity of the workflow.

But in today's project, I plan to use a small trick to significantly simplify the implementation of the workflow.

Instead of collecting user feedback after prompt or image generation to decide whether to regenerate the prompt, we add an if-else node at the very beginning of the workflow that checks whether the user input contains a specific keyword (here APPROVE; you can replace it with your own). If it does, the run goes to the image generation branch; if not, it goes to the prompt generation branch.

This way, each iteration is a re-execution of the workflow, thus decoupling the workflow from the iteration.
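In LlamaIndex Workflow, a step can return one of several event types, and the workflow dispatches the run to whichever step accepts that event. The sketch below mimics that routing with plain dataclasses so it runs without the library; the event class names and `route` are hypothetical, and the case-sensitive check is my assumption, since the article does not say how the keyword is matched.

```python
from dataclasses import dataclass
from typing import Union

APPROVE_KEYWORD = "APPROVE"  # the article's keyword; replace with your own


@dataclass
class GeneratePromptEvent:  # hypothetical event name
    user_input: str


@dataclass
class GenerateImageEvent:  # hypothetical event name
    user_input: str


def route(user_input: str,
          keyword: str = APPROVE_KEYWORD) -> Union[GeneratePromptEvent, GenerateImageEvent]:
    """The if-else node at the start of every run: pick exactly one branch."""
    # Case-sensitive substring check, so a casual "approve" inside a revision
    # request does not accidentally trigger drawing.
    if keyword in user_input:
        return GenerateImageEvent(user_input)
    return GeneratePromptEvent(user_input)
```

Because each user message triggers a fresh run that passes through `route` once, there is no loop inside the workflow itself; the "loop" is simply the user sending another message.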

After adding the branch node, we face a new problem: the image generation branch doesn't know which prompt the previous run generated. And I don't want to keep the entire message history from the last run, because it also contains the prompt's translation and other information that doesn't need to be sent to the LLM.

At this point, the best option is to have every run of the workflow share the same context and save the generated prompt into it.

Fortunately, LlamaIndex Workflow supports sharing the same context across multiple runs, so we can add logic to save variables into the Workflow Context.
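The idea is to create one context object and hand the same object to every run (in LlamaIndex Workflow, via the `ctx` argument of `run`). The sketch below is a library-free stand-in: `SharedContext`, its `set`/`get` methods, and `run_once` are hypothetical simplifications, not the library's state API, which has changed between Workflow versions. It shows a prompt run saving its result and a later APPROVE run reading it back.

```python
from typing import Any, Callable, Dict, Optional


class SharedContext:
    """A minimal stand-in for a Workflow Context that outlives a single run."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}

    def set(self, key: str, value: Any) -> None:
        self._store[key] = value

    def get(self, key: str, default: Any = None) -> Any:
        return self._store.get(key, default)


def run_once(ctx: SharedContext, user_input: str,
             write_prompt: Callable[[str], str],
             draw: Callable[[str], str]) -> str:
    """One workflow run. Every run receives the same ctx object."""
    if "APPROVE" in user_input:
        # Image branch: it needs only the saved prompt, not the old message history.
        last_prompt: Optional[str] = ctx.get("last_prompt")
        if last_prompt is None:
            return "No prompt yet. Describe what you want to draw first."
        return draw(last_prompt)
    # Prompt branch: generate a prompt and persist it for later runs.
    prompt = write_prompt(user_input)
    ctx.set("last_prompt", prompt)
    return prompt
```

Only the final prompt string is persisted, which is what keeps translations and other chatter out of later LLM calls.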

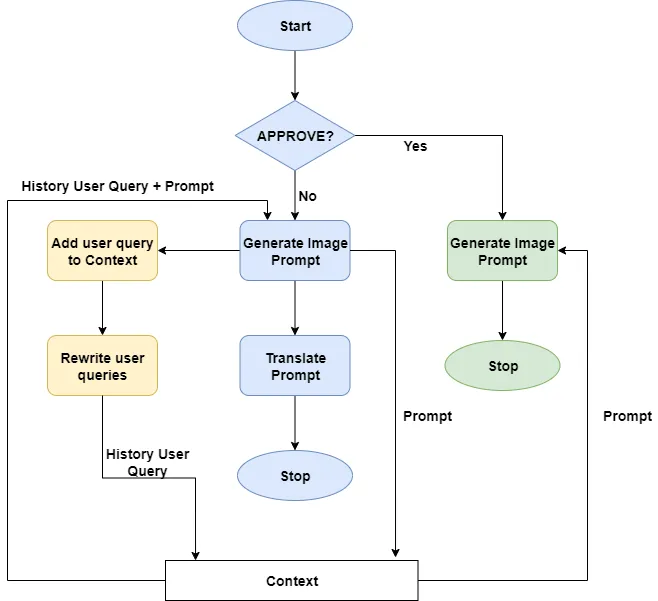

Rewrite the user's historical drawing requests

Since users will gradually adjust the prompts generated by the LLM through multiple conversations, we need to provide the LLM with the complete conversation history.

The common approach is to store the conversation as messages with the user and assistant roles and send that history to DeepSeek.

But with this approach, as adjustments continue, the message list grows longer and longer, and the LLM starts to overlook the truly important information.

Nor can we simply truncate the history and keep only the last few rounds, because the most detailed description of the drawing intent is usually in the user's initial request.

So instead, I rewrite the user's request history: the user's multiple rounds of adjustments to the prompt and image are merged into one complete request.

Specifically: create a list container in the Context. Each time a prompt is generated, append the user's latest input to this list, then call DeepSeek to rewrite all of the user's inputs into one complete drawing description and store it in the Context.
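Here is a minimal sketch of that append-then-rewrite step, using a plain dict as the shared state and assuming the rewriting model is a function `(system_prompt, user_message) -> reply`. The names `update_request_history`, `user_requests`, `rewritten_request`, and `REWRITE_SYSTEM` are hypothetical; the article's own keys and prompt are not shown. Per the design above, it would be called only on prompt-generation runs, not on APPROVE runs.

```python
from typing import Any, Callable, Dict, List

# Hypothetical: an LLM call modeled as (system_prompt, user_message) -> reply text.
LLM = Callable[[str, str], str]

# Illustrative instruction for the rewriting call (DeepSeek in the article).
REWRITE_SYSTEM = (
    "Merge the user's successive drawing requests into ONE complete description. "
    "When requests conflict, later ones win; keep every other detail."
)


def update_request_history(state: Dict[str, Any], user_input: str, rewriter: LLM) -> str:
    """Append the newest request, then condense all requests into one description."""
    # The list container lives in the shared state, so it survives across runs.
    history: List[str] = state.setdefault("user_requests", [])
    history.append(user_input)
    # Number the requests so the model can see their order.
    numbered = "\n".join(f"{i}. {req}" for i, req in enumerate(history, start=1))
    merged = rewriter(REWRITE_SYSTEM, numbered)
    state["rewritten_request"] = merged
    return merged
```

The full list is kept, so the detailed first request is never lost, but only the single merged description needs to reach the prompt-writing model.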

Improve the system prompt

Finally, we modify the node where DeepSeek generates drawing prompts. Before asking the LLM to generate a prompt, the node retrieves the rewritten request history and the previously generated drawing prompt from the Context and merges them into the system prompt.

On the workflow's very first run, both the rewritten request history and the previous prompt in the Context are empty. That doesn't matter, because the user's latest request is always sent to the LLM as a user-role message.
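The sketch below shows one way to assemble those messages, skipping whichever context sections are still empty. It uses the OpenAI-style `role`/`content` chat format, which DeepSeek's OpenAI-compatible API also accepts; `build_messages`, `BASE_SYSTEM`, and the section wording are hypothetical, not the article's actual prompt.

```python
from typing import Dict, List, Optional

# Illustrative base instruction; the article's real system prompt is not shown.
BASE_SYSTEM = "You write DALL-E-3 prompts for Chinese ink-wash illustrations."


def build_messages(user_input: str,
                   rewritten_request: Optional[str] = None,
                   last_prompt: Optional[str] = None) -> List[Dict[str, str]]:
    """Assemble chat messages for the prompt-writing call."""
    parts = [BASE_SYSTEM]
    # Only add context sections that exist; on the first run both are empty.
    if rewritten_request:
        parts.append(f"The user's full request so far:\n{rewritten_request}")
    if last_prompt:
        parts.append(
            f"The previous drawing prompt:\n{last_prompt}\n"
            "Revise it rather than starting over."
        )
    return [
        {"role": "system", "content": "\n\n".join(parts)},
        # The latest input is always sent as the user message.
        {"role": "user", "content": user_input},
    ]
```

Whatever the number of rounds, the model always sees exactly two messages, so the context size stays roughly constant instead of growing with every adjustment.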

With that, the complete business process diagram is designed, as shown in the figure below.

Next, we can start coding according to the business process diagram.

Develop Your Drawing Workflow Using LlamaIndex Workflow

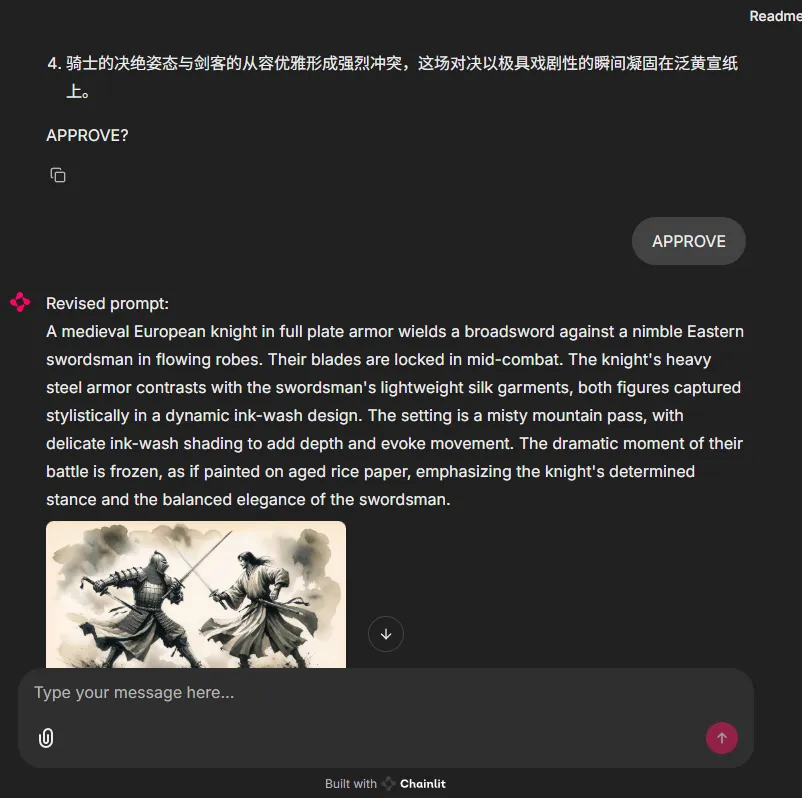

In today's project, in addition to using LlamaIndex Workflow to build the drawing workflow, I will also use Chainlit to create an interactive interface for working with the workflow application.

The dialogue interface is shown in the figure below:

Next, let's start writing the actual code logic.
