I Used Autogen GraphFlow and Qwen3 Coder to Solve Math Problems — And It Worked

More reliable than your math professor

In today’s tutorial, I will show you how I used Autogen’s latest GraphFlow with the Qwen3 Coder model to accurately solve all kinds of math problems, from elementary school level to advanced college math.

As always, I put the source code for this tutorial at the end of the article. You can read it anytime.

Course Introduction

You must have secretly thought about using an LLM to do your homework. I have had that idea since the very first day LLMs appeared: let them solve math problems for me.

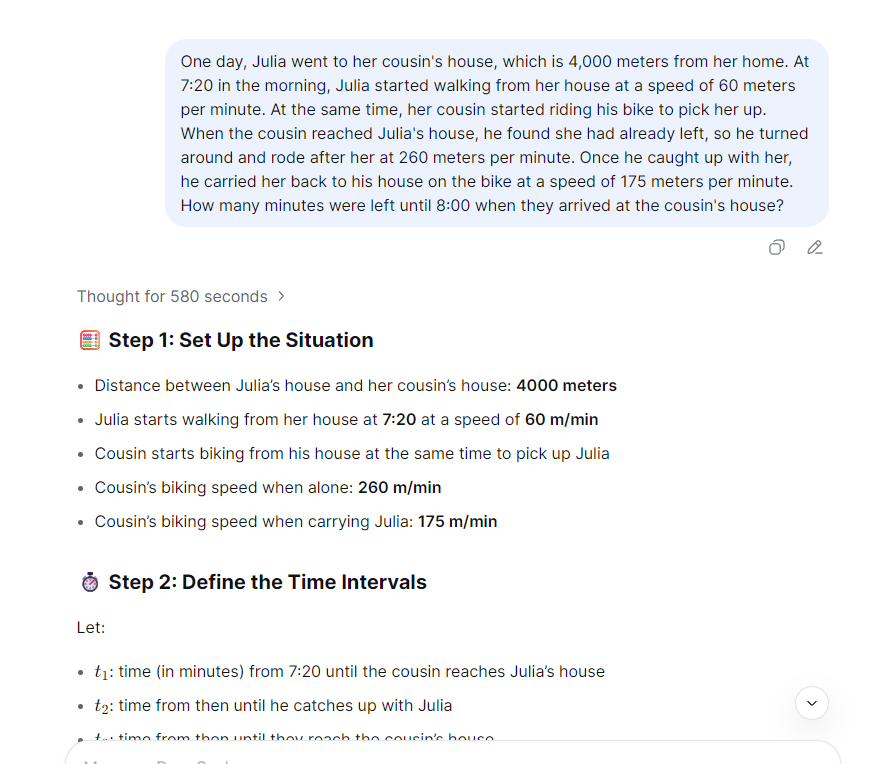

But dreams are sweet, reality is tough. If I throw a math problem at an LLM, it either gets the answer wrong or fails to reason at all. Here is what happened when I gave a math problem to the latest DeepSeek V3.1:

Not really the LLM’s fault. By design, LLMs are token generation models. Even if they can solve math problems, it is based on patterns learned during training, not actual numerical computation.

No worries. Even if we cannot ask LLMs to solve math problems directly, we can ask them to generate code that solves the problem. That is something LLMs do very well.

For example, I built a multi-agent app using Autogen GraphFlow and Qwen3 Coder that can accurately solve math problems from elementary school to university level:

The trick is simple: Qwen3 Coder writes Python code to solve the problem. A Docker Executor runs the code and gets the result. Then the app writes the final answer.
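To make the trick concrete, here is a minimal sketch of the generate, execute, answer pipeline in plain Python. It is not the app from this tutorial: `fake_coder` is a hypothetical stand-in that returns canned code instead of calling Qwen3 Coder, and a local subprocess stands in for the Docker Executor, so it offers none of Docker's isolation.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def fake_coder(question: str) -> str:
    """Hypothetical stand-in for the code-writing model: returns Python source."""
    # A real coder model would generate this text from the question.
    return "from fractions import Fraction\nprint(Fraction(1, 3) + Fraction(1, 6))"


def run_code(source: str, timeout: int = 30) -> str:
    """Run the source in a separate Python process and return its stdout."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "solution.py"
        script.write_text(source, encoding="utf-8")
        done = subprocess.run(
            [sys.executable, str(script)],
            capture_output=True,
            text=True,
            timeout=timeout,  # give up on code that never finishes
        )
    if done.returncode != 0:
        raise RuntimeError(done.stderr.strip())
    return done.stdout.strip()


def solve(question: str) -> str:
    """Generate code, execute it, then phrase the final answer."""
    result = run_code(fake_coder(question))
    return f"Answer: {result}"


print(solve("What is 1/3 + 1/6?"))  # prints "Answer: 1/2"
```

The number in the answer comes from executed code, not from the model's token predictions. That is the whole point of the design.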

Fun, right? Want to learn how? Don’t rush. In this lesson, I will walk you through building this multi-agent app step by step.

What Will I Learn?

In this lesson, you will learn:

- How to set up a Docker Executor agent that can run Python code.

- How to build an Autogen GraphFlow workflow using multiple atomic agent nodes to work together on complex tasks.

- How to use a reasoning agent to help the workflow plan how to solve hard problems.

- How to use a reflection agent to let the workflow review and improve its own code and results.

The best part is that I used small models like qwen3-30b and qwen3-coder-plus, and the final result beats large language models like DeepSeek v3.1 in numerical problem solving.

Atomic Capability Agents

In enterprise applications, the latest trend is to use multiple atomic agents, each doing one simple task. When you arrange them cleverly, they can handle complex jobs. This gives you two big wins:

- Each agent has one clear job. That means fewer mistakes and less hallucination.

- You don’t need to write long, confusing prompts. Just tell the agent what to do in simple words.

In this tutorial, I will show you how this approach gives your enterprise AI applications a real edge.

Ready? Buckle up. Let’s go.

Pre-Class Prep

Before we start, we need to get the environment ready.

Yes, the Python code generated by Qwen can run in your local virtual environment. But to keep your system safe from bad code, I recommend using Docker to create a dedicated runtime.

There are many guides on installing Docker, so I will skip that part. Today, I will just show you how to prepare the Docker image.

Here is the Dockerfile used for the container in this tutorial:

    FROM python:3.13-slim-bookworm

    WORKDIR /app

    ENV PYTHONDONTWRITEBYTECODE=1 \
        PYTHONUNBUFFERED=1 \
        PIP_NO_CACHE_DIR=1

    COPY requirements_docker.txt requirements.txt

    RUN pip install --no-cache-dir --upgrade pip && \
        pip install --no-cache-dir -r requirements.txt --upgrade

One special thing: we need to install some math packages in this Python runtime. That helps the agent solve all kinds of math problems better. I put all dependencies into requirements_docker.txt, which the Dockerfile copies into the image as requirements.txt:

    numpy==2.3.0
    pandas==2.3.2
    sympy==1.12
    scipy==1.16.1

You probably already thought of this: if I can install math packages, I can also install packages for other subjects. Just say the word.
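Once the image is built, it is worth confirming that the packages are really importable inside it. Here is a small check script as a sketch. The package names come from the requirements list above. The script itself, and the `check_env.py` file name used in the note below, are my own additions, not part of the original project.

```python
import importlib.util

# Names taken from the requirements list above.
REQUIRED = ["numpy", "pandas", "sympy", "scipy"]


def missing_packages(names: list[str]) -> list[str]:
    """Return the names that cannot be found by this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
print("missing:", missing if missing else "none")
```

One way to run it, assuming you saved it as check_env.py in the current folder, is to mount that folder into a throwaway container: `docker run --rm -v "$PWD:/work" python-docker-env python /work/check_env.py`.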

You need to build the Python runtime image ahead of time:

    docker build -t python-docker-env .

Also, to make the Docker Executor work, you need to install Autogen’s Docker extension into your project’s virtual environment.

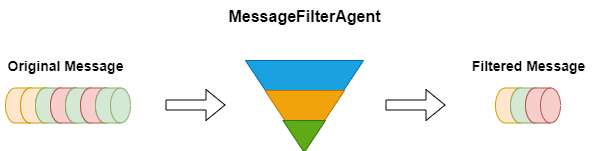

    pip install -U "autogen-ext[docker]"

Unlike other workflow frameworks, Autogen GraphFlow can filter messages. It lets you choose which messages go to the next node. That saves tokens and reduces hallucination.

If you want to trace message inputs and outputs at each node, you can use MLflow. I wrote a whole article about that. We will use it soon.

Now let’s start the lesson.

Ding Ding — Class Begins

Set Up the LLM Clients

In today’s multi-agent application, we will use two Qwen3 models.

The main one is qwen3-coder-plus. I will use this model to generate Python code that solves problems.

The other model is qwen3-30b-a3b-instruct-2507. It is an MoE model: small but smart. I use it in the workflow for thinking and code review.

This model cannot do deep reasoning, but it saves us lots of tokens and time. I can also build a separate reasoning agent to handle problem-solving logic. This setup is flexible and works well. I will prove that to you soon.

To make Autogen work with Qwen3 models, I prepared an OpenAILike client for you. You can click here to learn more.

I want the qwen3-30b model to think through solutions more carefully, so I lowered the temperature to 0.1:

    slm_client = OpenAILikeChatCompletionClient(
        model="qwen3-30b-a3b-instruct-2507",
        temperature=0.1
    )

I want the qwen3-coder model to be precise and consistent, so I set its temperature to 0.01:

    coder_client = OpenAILikeChatCompletionClient(
        model="qwen3-coder-plus",
        temperature=0.01
    )

Prepare the Docker Executor

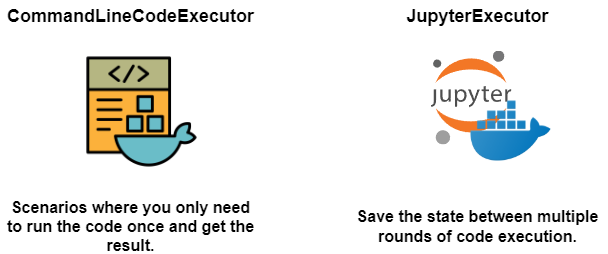

Autogen’s Code Executor comes in two types. CommandLineCodeExecutor runs Python code once, then exits. JupyterExecutor keeps state between multiple code runs.

After many tests in real projects, I find a stateful, Jupyter-based Python runtime much better when agents need to solve complex tasks by exploring on their own. For today’s task, though, DockerCommandLineCodeExecutor is enough.

We will build it using the image we prepared earlier. Since some data science code takes time to run, I suggest you set a longer timeout:

    from autogen_ext.code_executors.docker import DockerCommandLineCodeExecutor

    docker_executor = DockerCommandLineCodeExecutor(
        image="python-docker-env",
        timeout=300,  # seconds
    )

The Docker Executor container removes itself after the agent finishes. If you want to keep it for debugging, set auto_remove to False.

I am not sure whether Autogen’s Docker Executor has a bug here, so I play it safe. If you want to mount your host file system into the container, use the extra_volumes parameter, and bind to a path other than the workspace folder inside the container.

    from pathlib import Path

    docker_executor = DockerCommandLineCodeExecutor(
        image="python-docker-env",
        timeout=3000,
        extra_volumes={str(Path("./data").resolve()): {"bind": "/data", "mode": "rw"}},
    )

Write Your Agents

To keep things clean, I put agents and their prompts in separate files: agents.py and prompts.py.

This project has three core agents: the coder agent to generate code, exe_agent to run the code, and the reviewer agent to check the code and results.

The coder agent uses Autogen’s AssistantAgent. Its job is to understand the user’s question, plan a solution, and write executable Python code. It uses the qwen3-coder-plus model:

    from autogen_agentchat.agents import AssistantAgent

    coder = AssistantAgent(
        "coder",
        model_client=coder_client,
        system_message=PROMPT_CODER
    )

Here is its prompt:

    from textwrap import dedent

    PROMPT_CODER = dedent("""
        ## Role
        You are a developer engineer responsible for writing Python code.

        ## Task
        For the user's question, you will generate a piece of **Python** code that runs correctly and gives the right result.

        ### Requirements
        - **Write the code logic strictly following thinker's problem-solving approach.**
        - Use the print function to show the result.
        - Short code comments.
        - Only output the code block, no extra words or explanations.
        - When showing results, if it's a float, keep two decimal places.

        ### Available libraries
        - numpy, pandas, sympy, numexpr, scipy
    """)

I used a popular structured prompt method. Markdown syntax and simple language make the prompt clean and easy, almost like a programming language.

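I also told the agent which third-party packages it can use. This is important. Knowing what tools are available helps the agent do better work and avoid mistakes. Keep this list in sync with the image: numexpr is listed in the prompt but is not in the requirements file shown earlier, so add it there or drop it from the prompt.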
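Because the prompt asks for "only the code block", whatever runs the code has to pull that fenced block out of the model's reply first. Here is a rough sketch of that step. It is not Autogen's own parser, and `extract_python` is a name I made up for illustration.

```python
import re

# Three backticks, built this way so the example itself stays easy to embed.
FENCE = "`" * 3

# An optional language tag, a newline, then the shortest body up to the next fence.
CODE_BLOCK = re.compile(FENCE + r"(?:python|py)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_python(reply: str) -> list[str]:
    """Return the body of every fenced code block found in the reply."""
    return [match.group(1).strip() for match in CODE_BLOCK.finditer(reply)]


reply = f"{FENCE}python\nprint(2 + 2)\n{FENCE}"
print(extract_python(reply))  # prints ['print(2 + 2)']
```

If the model adds chatter around the block, the extraction still works. If it forgets the fence entirely, the list is empty and there is nothing to run. That is one reason the prompt is so strict about the output format.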

The exe_agent is an instance of CodeExecutorAgent. It is just a wrapper around the Docker Executor. Simple:

    from autogen_agentchat.agents import CodeExecutorAgent

    exe_agent = CodeExecutorAgent(
        "exe_agent",
        code_executor=docker_executor,
    )

The reviewer agent is also an AssistantAgent but uses the qwen3-30b model. Its job is to check the code and results from the coder. If it passes, the reviewer writes the answer. If it fails, the reviewer gives feedback:

    reviewer = AssistantAgent(
        "reviewer",
        model_client=slm_client,
        system_message=PROMPT_REVIEWER,
    )

Here is the reviewer’s prompt:

    PROMPT_REVIEWER = dedent("""
        ## Role
        You are a test engineer.

        ## Task
        - Check if the Python code follows the problem-solving approach proposed by thinker.
        - Verify if the code runs correctly and produces results.
        - Provide the review results.

        ### Review Passed
        "COOL"

        ### Review Failed
        - "REJECT"
        - Output the exact error message from exe_agent.
        - Give brief suggestions for improving the code.
        - Don't include any introductions or explanations.
        - Do not provide the revised code.
    """)

Again, I used simple structured text. I asked the agent to output different keywords for pass or fail.

There is a problem here: Earlier, I said we want atomic agents that each do one job. But coder and reviewer are clearly doing multiple things. That is because I wanted to test if using LLMs to generate code for math problems even works. Once that is proven, I will refactor each agent’s prompt to make their jobs more focused.
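The COOL and REJECT keywords in the reviewer prompt make its reply easy to branch on in code. Here is a minimal sketch of how such a reply could be split into a verdict and feedback. `parse_review` is a hypothetical helper of mine, not something Autogen provides.

```python
def parse_review(message: str) -> tuple[bool, str]:
    """Return (passed, feedback) for a reviewer reply."""
    text = message.strip()
    if "REJECT" in text:
        # Everything after the keyword is treated as feedback for the coder.
        feedback = text.split("REJECT", 1)[1].strip(' "\n-')
        return False, feedback
    return "COOL" in text, ""


print(parse_review('"COOL"'))
print(parse_review('"REJECT"\n- NameError: x is not defined'))
```

REJECT is checked first on purpose. A plain substring check for COOL would also fire if the word happened to appear inside rejection feedback, so the keywords should be ones the model is unlikely to use by accident.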

Build a Quick Prototype to Test the Idea

Time to build a quick prototype to show the boss that this idea works.

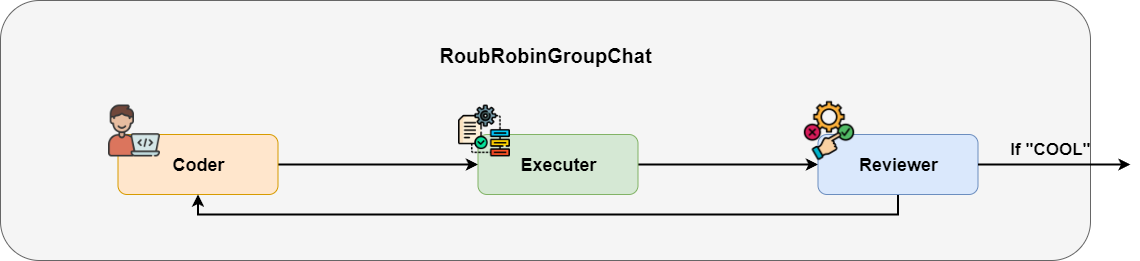

In the prototype stage, I don’t want to work late building a complex Autogen GraphFlow. I want to solve it in one line of code. In Autogen, the simplest way to run agents in a loop is RoundRobinGroupChat.

RoundRobinGroupChat runs its agents in turn, over and over, in an endless loop. It only stops when it hits a termination condition.
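If that sounds abstract, the idea fits in a few lines of plain Python. This is only a sketch of the round-robin pattern, not Autogen's implementation. The three agent functions are hypothetical stubs, and `max_turns` is a safety cap I added.

```python
from typing import Callable

# An "agent" here is just a function from the message history to a reply.
Agent = Callable[[list[str]], str]


def round_robin(agents: list[Agent], task: str, stop_word: str, max_turns: int = 12) -> list[str]:
    """Call the agents in order, repeatedly, until a reply mentions stop_word."""
    history = [task]
    for turn in range(max_turns):  # hard cap so a missing stop word cannot loop forever
        reply = agents[turn % len(agents)](history)
        history.append(reply)
        if stop_word in reply:
            break
    return history


# Hypothetical stand-ins for coder, exe_agent and reviewer.
def coder_stub(history: list[str]) -> str:
    return "print(1 + 1)"


def executor_stub(history: list[str]) -> str:
    return "2"


def reviewer_stub(history: list[str]) -> str:
    return "COOL"


history = round_robin([coder_stub, executor_stub, reviewer_stub], "What is 1 + 1?", stop_word="COOL")
print(history)
```

The cap matters in practice: if the reviewer never says the stop word, the loop would otherwise run forever. In Autogen, as far as I know, you can get the same safety net by combining TextMentionTermination with MaxMessageTermination using the `|` operator.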

I set a condition to stop when the message contains “COOL”. Then I put all agents into the RoundRobinGroupChat in order.

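    from autogen_agentchat.conditions import TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat

    text_termination = TextMentionTermination("COOL")

    team = RoundRobinGroupChat([coder, exe_agent, reviewer],
                               termination_condition=text_termination)

Let’s test it with a math problem: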

    import asyncio

    from autogen_agentchat.ui import Console

    async def main():
        task = "The bag has 4 black balls and 1 white ball. Every time, you pick one ball at random and swap it for a black ball. Keep going, and figure out the chance of pulling a black ball on the third try."
        async with docker_executor:
            await Console(team.run_stream(task=task))

    asyncio.run(main())

One thing to remember: when you run agents with Docker Executor, you need to manage the container’s start and stop. The best way is to use async with.

Looks good. The agent understood the question, wrote Python code, and got the result. The reviewer checked it and wrote the answer.
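For reference, here is the kind of code that solves this problem exactly. It is my own sketch, not the code the coder agent produced. It assumes "swap it for a black ball" means the drawn ball is set aside and a black ball goes into the bag, so the bag always holds five balls.

```python
from fractions import Fraction


def p_black_on_draw(draw: int, black: int = 4, white: int = 1) -> Fraction:
    """Probability that the given draw (1-based) is black."""
    total = black + white
    # dist[w] = probability that w white balls are in the bag before the current draw
    dist = {white: Fraction(1)}
    for _ in range(draw - 1):
        nxt: dict[int, Fraction] = {}
        for w, p in dist.items():
            p_white = Fraction(w, total)
            if w > 0:
                # A white ball is drawn and replaced by a black one.
                nxt[w - 1] = nxt.get(w - 1, Fraction(0)) + p * p_white
            # A black ball is drawn and replaced by a black one: nothing changes.
            nxt[w] = nxt.get(w, Fraction(0)) + p * (1 - p_white)
        dist = nxt
    return sum((p * Fraction(total - w, total) for w, p in dist.items()), Fraction(0))


answer = p_black_on_draw(3)
print(answer, f"{float(answer):.2f}")  # prints 109/125 0.87
```

You can check it by hand. The white ball survives the first two draws with probability (4/5)² = 16/25, and it is then drawn with probability 1/5. So the chance of black on the third draw is 1 − 16/125 = 109/125.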

Spot Problems in the Prototype and Plan Improvements

As a quick prototype, RoundRobinGroupChat is enough to prove the idea works. But if you put this code into production, you will find it unstable and inaccurate.

Before turning this into production code, we need to identify what is wrong and how to fix it.

Try the prototype a few times. For simple problems it works. For more challenging math problems, the coder writes incorrect code.

Why? Because the coder has to both plan the solution and write the code. For hard problems, it cannot think of a good plan, so the code logic fails.

The reviewer has the same issue. It checks the code, writes the answer if it passes, or gives feedback if it fails.

It is too busy. It mixes all jobs. The answer often includes code comments. That is hard for students who just want to learn how to solve the problem, not read Python code.

Remember our agent design rule? In a multi-agent system, each agent should do one atomic job. That makes the whole system perform better.

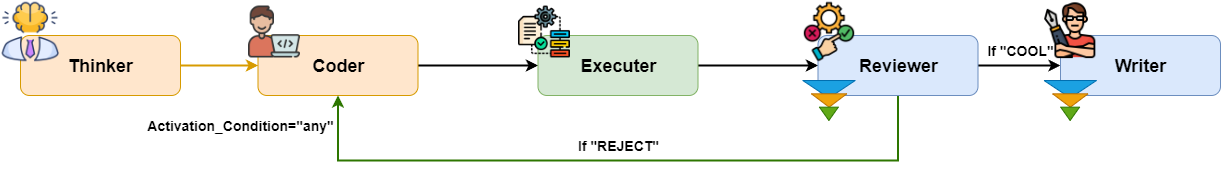

So our next step is clear. We need to split the jobs of coder and reviewer. Let coder only write code. Let reviewer only check if the code passes or fails.

We also need two new agents:

The thinker agent acts as the brain of the system. It uses CoT thinking to plan the solution. The coder follows the thinker’s plan exactly — no more thinking.

The writer agent writes the solution and answer in student-friendly language based on the correct code and result. The reviewer no longer writes answers; it only checks code.

Here is the new workflow:

As you can see, thinker and writer are outside the loop. RoundRobinGroupChat won’t work anymore. We need a more complex workflow. Ladies and gentlemen, please welcome Autogen GraphFlow.
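Before bringing in GraphFlow itself, the shape of the new workflow can be sketched in plain Python as a directed graph with conditional edges. This is not the GraphFlow API. The node outputs are hypothetical stubs, and the REJECT and COOL edge conditions mirror the reviewer's keywords.

```python
from typing import Callable

# Each edge is (target node, condition on the source node's output).
Edge = tuple[str, Callable[[str], bool]]


def always(_output: str) -> bool:
    return True


GRAPH: dict[str, list[Edge]] = {
    "thinker": [("coder", always)],
    "coder": [("exe_agent", always)],
    "exe_agent": [("reviewer", always)],
    "reviewer": [
        ("coder", lambda out: "REJECT" in out),  # loop back with feedback
        ("writer", lambda out: "COOL" in out),   # leave the loop
    ],
    "writer": [],
}


def walk(graph: dict[str, list[Edge]], nodes: dict[str, Callable[[], str]],
         start: str, max_steps: int = 20) -> list[str]:
    """Follow the first matching edge from each node until none matches."""
    path, current = [], start
    for _ in range(max_steps):
        path.append(current)
        output = nodes[current]()
        target = next((t for t, cond in graph[current] if cond(output)), None)
        if target is None:
            break
        current = target
    return path


# Stub nodes: the reviewer rejects once, then approves.
verdicts = iter(["REJECT", "COOL"])
nodes = {
    "thinker": lambda: "plan",
    "coder": lambda: "code",
    "exe_agent": lambda: "result",
    "reviewer": lambda: next(verdicts),
    "writer": lambda: "answer",
}

path = walk(GRAPH, nodes, "thinker")
print(" -> ".join(path))
```

The thinker and writer each run once, while coder, exe_agent and reviewer repeat until the review passes. A plain round-robin loop cannot express that.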

Prepare the New Agents

Before coding the GraphFlow, we need to add two new agents as planned.

First, the thinker agent. Even though it is the brain, it still uses the qwen3-30b model. We only need it to plan the solution:

    thinker = AssistantAgent(
        "thinker",
        model_client=slm_client,
        system_message=PROMPT_THINKER,
    )

Its prompt is simple and structured:

    PROMPT_THINKER = dedent("""
        ## Role
        You are a college professor who is good at breaking down complex problems into clear steps for solving them.

        ## Task
        For the user's question, break it down into a problem-solving approach that can be calculated step by step using code.

        ## Response
        Use an ordered list to show the steps.

        ### Requirements
        - Do not include actual code.
        - Do not solve the problem.
        - Do not do any numerical calculations.
    """)

Second, the writer agent. It also uses the qwen3-30b model. It writes the answer based on correct code and results. I will show you later how to pick the correct code:

    writer = AssistantAgent(
        "writer",
        model_client=slm_client,
        system_message=PROMPT_WRITER,
        model_client_stream=True
    )

Here is the writer’s prompt:

    PROMPT_WRITER = dedent("""
        ## Role
        You're a college professor who's really good at explaining problem-solving ideas and answers in a way students can easily understand.

        ## Task
        Based on the user's question, use the logic of the Python code and the result of code execution to write the answer.

        ## Answer Content
        The answer should include the problem-solving idea and the final answer.

        ### Style
        - Use natural language that humans can understand.
        - **Don't include any code**.

        ### Note
        - **Use the execution result from exe_agent as the answer**.
        - The problem-solving idea must match the logic in the coder's code.
        - You can't come up with your own problem-solving idea.
    """)

With both agents ready, we can now build the final agent workflow using Autogen GraphFlow.

What Is Autogen GraphFlow

When people talk about Autogen, they say its biggest difference from LangGraph or CrewAI is that Autogen lets LLMs choose which agent to use to plan and complete tasks. So Autogen feels more suited for research.

But for enterprise applications, we often want consistent and robust code execution. So we prefer to pre-arrange how tasks flow between agents using workflows.

Autogen’s team saw this too. In a recent version, they released GraphFlow, their workflow framework.

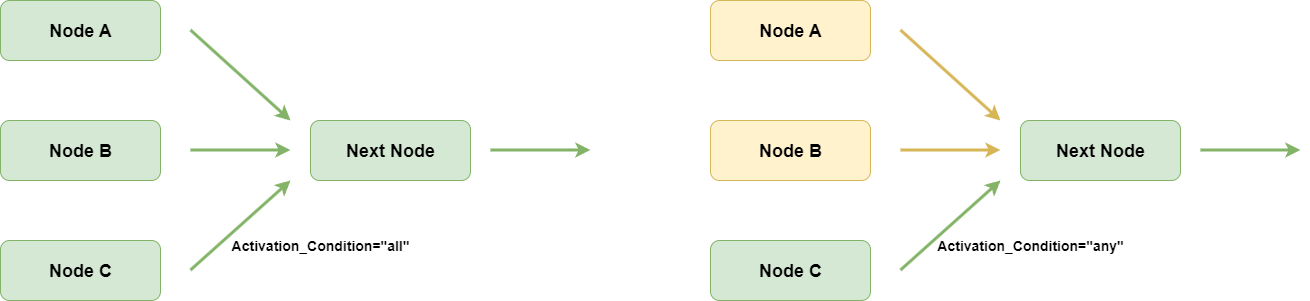

Compared to other workflow frameworks, GraphFlow has two special features:

- It can filter messages going into a node. Only the messages the LLM needs to know are kept. That reduces hallucination.

- It can group edges going into a node. You can set the node to run after all edges finish or just after some edges finish. That lets you build complex branches or loops.

In today’s application, we need both features. When code fails, the reviewer sends feedback to the coder. When code passes, the writer reads only the correct code, with no noise from wrong code.
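To picture what message filtering buys us, here is a minimal plain-Python sketch. It is not the GraphFlow filtering API. The `Message` class, the `last_from` helper and the sample history are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Message:
    source: str
    content: str


def last_from(history: list[Message], sources: list[str]) -> list[Message]:
    """Keep only the most recent message from each listed source, in original order."""
    latest: dict[str, int] = {}
    for index, msg in enumerate(history):
        if msg.source in sources:
            latest[msg.source] = index  # later messages overwrite earlier ones
    return [history[i] for i in sorted(latest.values())]


# A made-up run: the first attempt fails, the second passes.
history = [
    Message("user", "question"),
    Message("thinker", "plan"),
    Message("coder", "buggy code"),
    Message("exe_agent", "NameError"),
    Message("reviewer", "REJECT"),
    Message("coder", "fixed code"),
    Message("exe_agent", "0.87"),
    Message("reviewer", "COOL"),
]

visible = last_from(history, ["user", "coder", "exe_agent"])
print([m.content for m in visible])  # prints ['question', 'fixed code', '0.87']
```

The writer never sees the buggy attempt or the error message. It has less to read and less to get confused by.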

In the end, we'll have a multi-agent app with a chat interface, and it can perfectly answer your math questions:

Let’s begin.

Message Filtering and Edge Grouping

All agents are ready. Now we just need to focus on building the GraphFlow.
