Build AutoGen Agents with Qwen3: Structured Output & Thinking Mode

Save yourself 40 hours of trial and error

This article will walk you through integrating AutoGen with Qwen3, including how to enable structured output for Qwen3 in AutoGen and manage Qwen3's thinking mode capabilities.

If you're in a hurry for the solution, you can skip the "how-to" sections and jump straight to the end, where I've shared all the source code. Feel free to use and modify it without asking for permission.

AutoGen is no longer receiving updates, so I've also prepared a Microsoft Agent Framework version of the solution.

Introduction

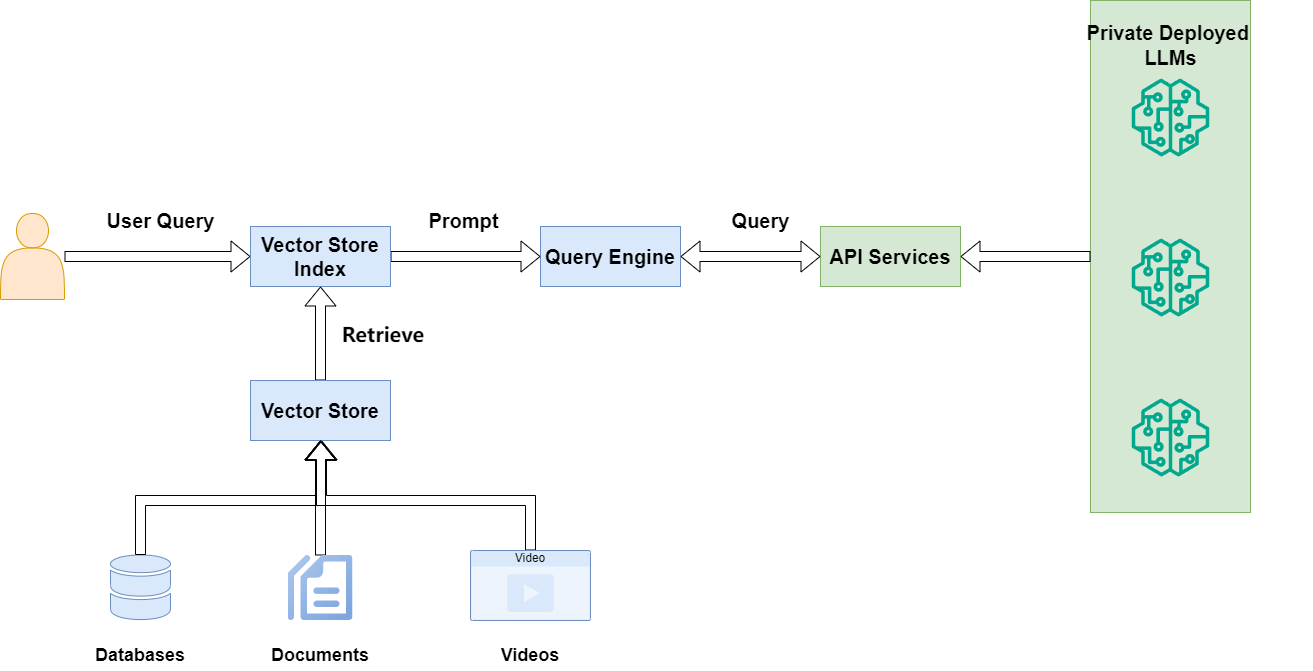

As enterprises begin deploying Qwen3 models, corresponding agent frameworks must adapt to fully utilize Qwen3's capabilities.

For the past two months, my team and I have been working on a large-scale project using AutoGen. Like LlamaIndex Workflow, this event-driven agent framework allows our agents to integrate better with enterprise message pipelines, leveraging the full power of our data processing architecture.

If you're also interested in LlamaIndex Workflow, I've written two articles about it.

We've spent considerable time with Qwen3, experimenting with various approaches and even consulting directly with the Qwen team on certain options.

I'm confident this article will help you, even if you're not using Qwen series models or AutoGen specifically. The problem-solving approaches are universal, so you'll save significant time.

Why should I care?

There's an old Chinese saying: "A craftsman must first sharpen his tools."

To benefit from the performance gains and development conveniences of the latest models, the first step is integrating them into your existing systems.

This article will cover:

- Creating an OpenAI-like client that lets AutoGen connect to Qwen3 through its OpenAI-compatible API.

- Exploring AutoGen's structured output implementation and alternative approaches, ultimately adding structured output support for Qwen3.

- Supporting Qwen3's extra_body parameters in AutoGen to control the thinking mode toggle.

- Finally, we'll put these lessons into practice with an article-summarizing agent project.

Let's begin!


Step 1: Building an OpenAILike Client

Testing the official OpenAI client

Both Qwen and DeepSeek models support OpenAI-compatible API calls, and AutoGen provides an OpenAIChatCompletionClient class for GPT-series models.

Can we use it to connect to public cloud or privately deployed Qwen3 models?

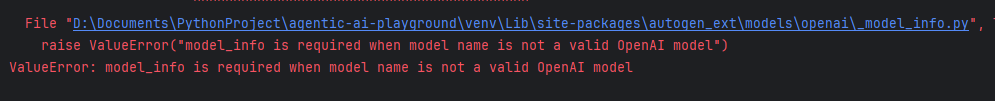

Unfortunately not. When we tried:

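```python
import os

from autogen_agentchat.agents import AssistantAgent
from autogen_ext.models.openai import OpenAIChatCompletionClient

# Point the stock OpenAI client at an OpenAI-compatible Qwen endpoint.
original_model_client = OpenAIChatCompletionClient(
    model="qwen-plus-latest",
    base_url=os.getenv("OPENAI_BASE_URL"),
)

agent = AssistantAgent(
    name="assistant",
    model_client=original_model_client,
    system_message="You are a helpful assistant.",
)
```

We encountered an error.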

Checking the _model_client.py file reveals that OpenAIChatCompletionClient only supports GPT-series models plus some Gemini and Claude models. Other models aren't guaranteed to work.

But this isn't new. Remember when the OpenAI client last restricted model types? Exactly - LlamaIndex's OpenAI client had similar limitations, but the community provided an OpenAILike client as a workaround.

Our solution here is similar: we'll build an OpenAILike client supporting Qwen (and DeepSeek) series models.

Trying the model_info parameter

Checking the API docs reveals a model_info parameter: "Required if the model name is not a valid OpenAI model."

So OpenAIChatCompletionClient can support non-OpenAI models if we provide the model's own model_info.

For Qwen3 models on the public cloud, qwen-plus-latest and qwen-turbo-latest are the newest. I'll demonstrate with qwen-plus-latest:

original_model_client = OpenAIChatCompletionClient(

model="qwen-plus-latest",

base_url=os.getenv("OPENAI_BASE_URL"),

model_info={

"vision": False,

"function_calling": True,

"json_output": True,

"family": 'qwen',

"structured_output": True,

"multiple_system_messages": False,

}

)

...

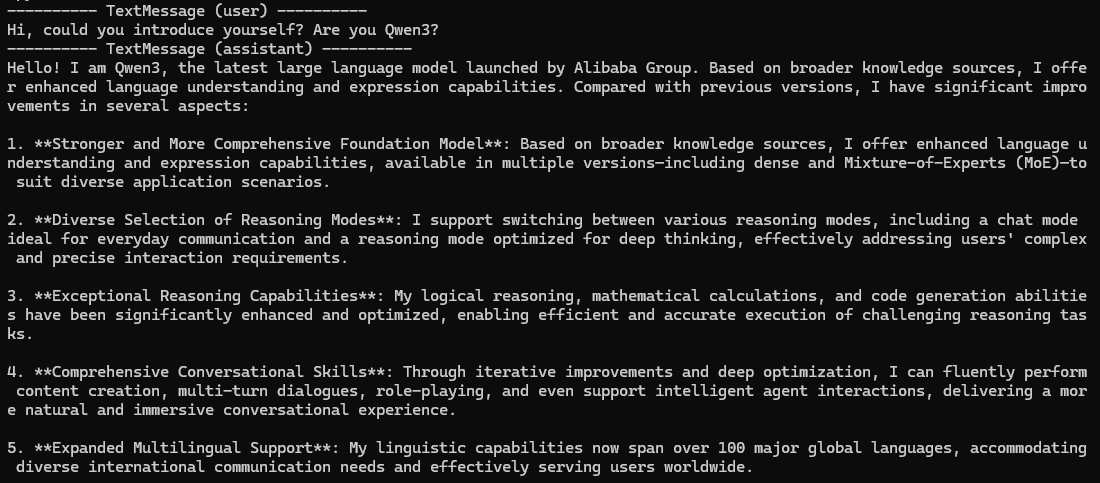

async def main():

await Console(

agent.run_stream(task="Hi, could you introduce yourself? Are you Qwen3?")

)

The model connects successfully and generates content normally. But this creates new headaches - I don't want to copy-paste model_info constantly, nor do I care about its various options.

The solution: implement our own OpenAILike client that encapsulates this information.

Implementing OpenAILikeChatCompletionClient

We'll implement this through inheritance. The code lives in utils/openai_like.py.

By inheriting from OpenAIChatCompletionClient, we'll automate model_info handling.

First, we compile all potential models' model_info into a dict, with a default model_info for unlisted models.

```python
# Known models and their capabilities (other entries elided).
_MODEL_INFO: dict[str, dict] = {
    # ...
    "qwen-plus-latest": {
        "vision": False,
        "function_calling": True,
        "json_output": True,
        "family": "qwen",
        "structured_output": True,
        "context_window": 128_000,
        "multiple_system_messages": False,
    },
    # ...
}

# Fallback for any model not listed above.
DEFAULT_MODEL_INFO = {
    "vision": False,
    "function_calling": True,
    "json_output": True,
    "family": "qwen",
    "structured_output": True,
    "context_window": 32_000,
    "multiple_system_messages": False,
}
```

In __init__, we check whether the user provided model_info. If not, we look up the model parameter in our table and fall back to the default if the model isn't listed.

Pointing OpenAIChatCompletionClient at a non-OpenAI endpoint means passing base_url every time, so we've streamlined this too. If base_url is missing, we read it from the OPENAI_BASE_URL or OPENAI_API_BASE environment variable.

Our final __init__ method looks like this:

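```python
import os

from autogen_ext.models.openai import OpenAIChatCompletionClient

# _MODEL_INFO and DEFAULT_MODEL_INFO are the tables defined above.


class OpenAILikeChatCompletionClient(OpenAIChatCompletionClient):
    def __init__(self, **kwargs):
        # Default to qwen-max and make sure the parent class receives the model name.
        self.model = kwargs.setdefault("model", "qwen-max")
        # Fill in model_info from our table unless the caller supplied one.
        if "model_info" not in kwargs:
            kwargs["model_info"] = _MODEL_INFO.get(self.model, DEFAULT_MODEL_INFO)
        # Fall back to environment variables for the endpoint URL.
        if "base_url" not in kwargs:
            kwargs["base_url"] = os.getenv("OPENAI_BASE_URL") or os.getenv("OPENAI_API_BASE")
        super().__init__(**kwargs)
```

Let's test our OpenAILikeChatCompletionClient: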

```python
model_client = OpenAILikeChatCompletionClient(
    model="qwen-plus-latest",
)

agent = AssistantAgent(
    name="assistant",
    model_client=model_client,
    system_message="You are a helpful assistant.",
)
```

Perfect! Just specify the model and we're ready to use the latest Qwen3.

Step 2: Supporting structured_output

Structured output uses a class derived from Pydantic's BaseModel to define the format of the model's response. This gives consistent, predictable output, so the messages agents exchange are more precise.

For enterprise applications using frameworks and models, structured_output is essential.

AutoGen's structured_output implementation

AutoGen supports structured_output out of the box. Define a Pydantic BaseModel class and pass it to AssistantAgent via output_content_type.

The agent's response then becomes a StructuredMessage containing structured output.

Let's test Qwen3's structured_output capability.

Following official examples, we'll create a sentiment analysis agent. First, define a data class:

```python
from typing import Literal

from pydantic import BaseModel


class AgentResponse(BaseModel):
    thoughts: str
    response: Literal["happy", "sad", "neutral"]
```

Then pass this class to the agent via output_content_type:

structured_output_agent = AssistantAgent(

name="structured_output_agent",

model_client=model_client,

system_message="Categorize the input as happy, sad, or neutral following json format.",

output_content_type=AgentResponse

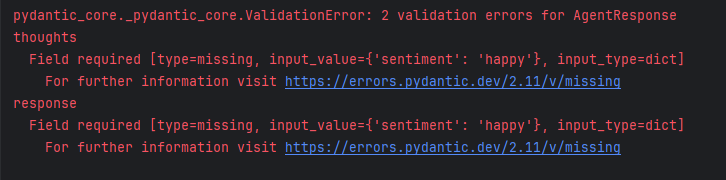

)Running this agent produces an error because the model's JSON output doesn't match our class definition, suggesting the model didn't receive our parameters:

Why? Does Qwen not support structured_output? To answer, we need to understand AutoGen's structured_output implementation.

When working directly with LLMs, structured_output typically adjusts the chat completion API's response_format parameter.

Since our client subclasses OpenAIChatCompletionClient, we search its code for structured_output references and find this comment:

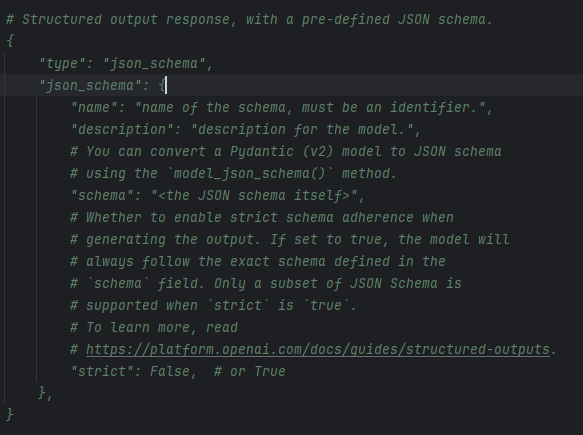

This suggests that with structured_output, OpenAI client's response_format parameter is set to:

```python
{
    "type": "json_schema",
    "json_schema": {
        "name": "name of the schema, must be an identifier.",
        "description": "description for the model.",
        "schema": "<the JSON schema itself>",
        "strict": False,  # or True
    },
}
```

But Qwen3's documentation shows that its response_format only supports {"type": "text"} and {"type": "json_object"}, not {"type": "json_schema"}.
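To make the mismatch concrete, here is a sketch of the two payloads side by side as plain Python dicts. The schema is hand-written to mirror AgentResponse. With Pydantic, AgentResponse.model_json_schema() would produce a similar schema with extra "title" entries. qwen3_accepts is a hypothetical helper that encodes the supported types described above.

```python
# Hand-written JSON schema mirroring AgentResponse (assumption: kept in sync by hand).
AGENT_RESPONSE_SCHEMA = {
    "type": "object",
    "properties": {
        "thoughts": {"type": "string"},
        "response": {"type": "string", "enum": ["happy", "sad", "neutral"]},
    },
    "required": ["thoughts", "response"],
}

# Structured output: the schema travels inside response_format.
json_schema_format = {
    "type": "json_schema",
    "json_schema": {
        "name": "AgentResponse",
        "schema": AGENT_RESPONSE_SCHEMA,
        "strict": False,
    },
}

# JSON mode: the model must emit valid JSON, but no schema is attached.
json_object_format = {"type": "json_object"}

# response_format types Qwen3 accepts, as described in its documentation.
QWEN3_RESPONSE_FORMAT_TYPES = {"text", "json_object"}


def qwen3_accepts(response_format: dict) -> bool:
    """Return True if Qwen3 accepts this response_format type (hypothetical check)."""
    return response_format.get("type") in QWEN3_RESPONSE_FORMAT_TYPES
```

The json_object payload has nowhere to carry the schema, so the schema has to reach Qwen3 some other way.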

Does this mean Qwen3 can't do structured_output?

Not necessarily. The essence of structured_output is getting the model to output JSON matching our schema. Without response_format, we have other solutions.

Implementing structured_output via function calling

Consider how Python works: every class is a callable object, just like a function, and Pydantic BaseModel classes are no exception.

Can we leverage this with LLM function calling for structured_output? Absolutely - by treating data classes as special functions.
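To see why this works, here is a dependency-free sketch of the idea. It uses a standard-library dataclass as a stand-in for the Pydantic AgentResponse. class_to_tool_schema is a hypothetical helper that hand-builds an OpenAI-style function tool from the class's fields; AutoGen generates the real tool definition for you. Executing the model's tool call then amounts to calling the class with the returned arguments.

```python
import json
from dataclasses import dataclass
from typing import Literal, get_args, get_origin, get_type_hints


# Standard-library stand-in for the Pydantic AgentResponse model.
@dataclass
class AgentResponse:
    thoughts: str
    response: Literal["happy", "sad", "neutral"]


def class_to_tool_schema(cls) -> dict:
    """Describe a class's fields as an OpenAI-style function tool (hypothetical helper)."""
    properties = {}
    for name, hint in get_type_hints(cls).items():
        if get_origin(hint) is Literal:
            # Literal values become a string enum the model must choose from.
            properties[name] = {"type": "string", "enum": list(get_args(hint))}
        else:
            # Every non-Literal field is treated as a JSON string in this sketch.
            properties[name] = {"type": "string"}
    return {
        "type": "function",
        "function": {
            "name": cls.__name__,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": list(properties),
            },
        },
    }


# The model "calls" the tool by returning JSON arguments. Because the class is
# callable, running that tool call is just instantiating the class.
arguments = '{"thoughts": "The user says they are happy.", "response": "happy"}'
result = AgentResponse(**json.loads(arguments))
```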

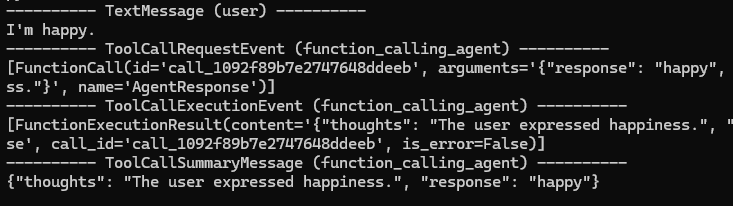

Let's modify our agent. Instead of output_content_type, we'll use the tools parameter and pass AgentResponse as a tool. The model will then call this tool to produce its output:

```python
function_calling_agent = AssistantAgent(
    name="function_calling_agent",
    model_client=model_client,
    system_message="Categorize the input as happy, sad, or neutral following json format.",
    # Pass the data class as a tool instead of using output_content_type.
    tools=[AgentResponse],
)
```

Results:

The model outputs JSON matching our class's schema perfectly. This works!

However, when the agent has multiple tools, it sometimes ignores the data class tool and replies in free-form text instead.

Is there a more stable approach? Let's think deeper: structured_output's essence is getting JSON matching our schema. Can we leverage that directly?

Making the model output according to json_schema

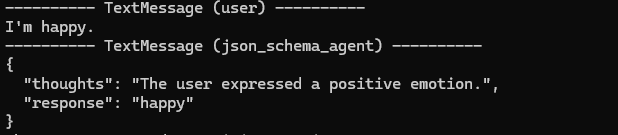

Having the model output according to json_schema is entirely feasible.

AutoGen passes the schema through response_format's json_schema field, but we can also specify the schema directly in the system prompt:

json_schema_agent = AssistantAgent(

name="json_schema_agent",

model_client=model_client,

system_message=dedent(f"""

Categorize the input as happy, sad, or neutral,

And follow the JSON format defined by the following JSON schema:

{AgentResponse.model_json_schema()}

""")

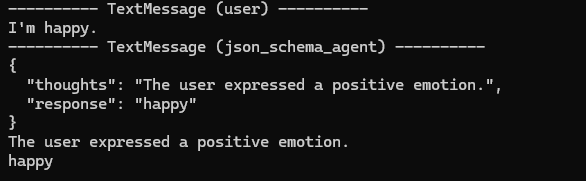

)Results:

The agent outputs JSON matching our schema. Understanding the principles makes structured_output implementation straightforward.

We can further convert JSON output back to our data class for code processing:

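```python
import asyncio

from autogen_agentchat.ui import Console


async def main():
    result = await Console(json_schema_agent.run_stream(task="I'm happy."))
    # The last message holds the model's JSON reply; parse it into AgentResponse.
    structured_result = AgentResponse.model_validate_json(
        result.messages[-1].content
    )
    print(structured_result.thoughts)
    print(structured_result.response)


asyncio.run(main())
```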

Perfect.
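One practical caveat, stated as an assumption rather than observed behavior: chat models sometimes wrap JSON replies in a Markdown code fence, and model_validate_json would reject that text. A small hypothetical helper, extract_json_text, can unwrap the fence before parsing:

```python
import json
import re

# A Markdown code-fence marker, built from single backticks so this listing stays a valid Markdown block.
TICKS = "`" * 3
# Optional "json" language tag, then the payload, then the closing fence.
_FENCED_JSON = re.compile(TICKS + r"(?:json)?\s*(.*?)\s*" + TICKS, re.DOTALL)


def extract_json_text(reply: str) -> str:
    """Return the JSON text from a model reply, unwrapping a Markdown fence if present."""
    text = reply.strip()
    match = _FENCED_JSON.fullmatch(text)
    return match.group(1) if match else text


# Example: a fenced reply parses the same as a bare one.
reply = TICKS + 'json\n{"thoughts": "The user is happy.", "response": "happy"}\n' + TICKS
data = json.loads(extract_json_text(reply))
```

With Pydantic, the parse step above would become AgentResponse.model_validate_json(extract_json_text(result.messages[-1].content)).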

But specifying json_schema in system_prompt is cumbersome. Can we make Qwen3 agents support output_content_type directly?

Making Qwen3 support AutoGen's output_content_type parameter

Our ultimate goal is framework-level Qwen3 support for structured_output via output_content_type, without changing AutoGen usage.
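Combining the two findings above suggests one possible shape for this. It is a sketch under my own assumptions, not necessarily the approach taken in the full solution. When a request asks for a json_schema response_format, downgrade it to json_object, which Qwen3 accepts, and move the schema into the system prompt. downgrade_json_schema_request is a hypothetical helper that works on OpenAI-style message dicts. Hooking it into OpenAILikeChatCompletionClient is not shown.

```python
import copy
import json


def downgrade_json_schema_request(messages: list[dict], response_format: dict):
    """Turn a json_schema request into json_object mode plus a schema-bearing prompt.

    Hypothetical helper. Returns (messages, response_format); the inputs are not mutated.
    """
    if response_format.get("type") != "json_schema":
        # Nothing to rewrite: text and json_object requests pass through unchanged.
        return messages, response_format
    schema = response_format["json_schema"]["schema"]
    instruction = (
        "Respond only with JSON that conforms to this JSON schema:\n"
        + json.dumps(schema, ensure_ascii=False)
    )
    new_messages = copy.deepcopy(messages)
    if new_messages and new_messages[0].get("role") == "system":
        # Extend the existing system prompt instead of adding a second one.
        new_messages[0]["content"] += "\n\n" + instruction
    else:
        new_messages.insert(0, {"role": "system", "content": instruction})
    return new_messages, {"type": "json_object"}
```

Appending to the existing system message, rather than adding another, keeps the request compatible with model_info entries that set multiple_system_messages to False.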
